In 2023, I joined a UC Berkeley team selected for NASA’s SUITS challenge. We were among the 11 top groups chosen by NASA to design AR software for HoloLens to aid lunar missions. This project is still in development; more animated graphics will be added in May 2024.

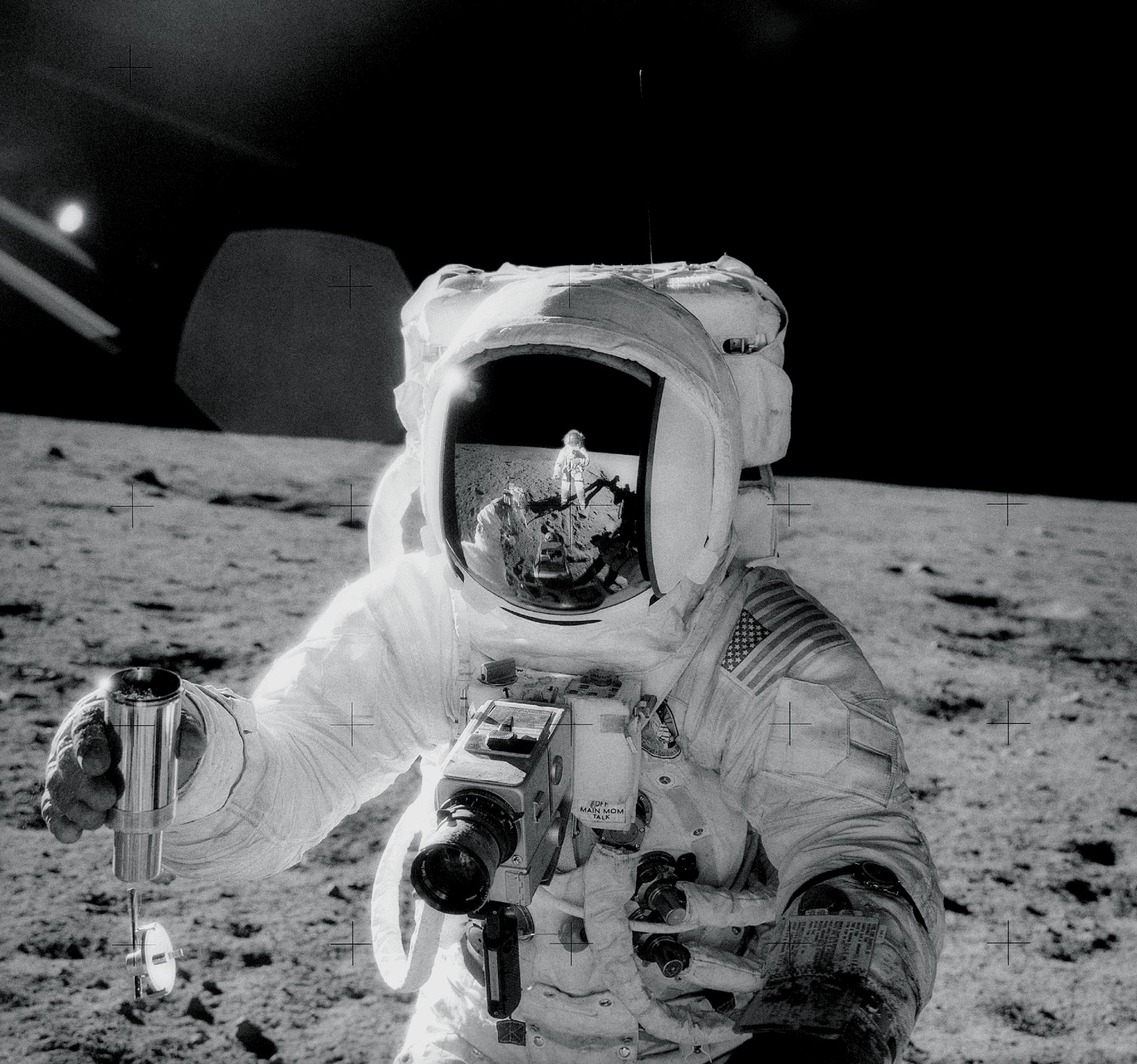

Astronauts need to complete a series of tasks on the moon, but they face numerous physical and cognitive limitations due to the challenging environment.

Restricted Movement

Multitasking Limitations

Safety Concerns

Limited Visibility

Opportunities

✨

How might we help astronauts explore the moon under physical and cognitive limitations using AI and AR technology?

Design Highlights

LLM-Powered Responsive Voice Assistant

Ursa's voice assistant is powered by a large language model (LLM), giving astronauts tailored instructions and feedback.

Non-intrusive Interfaces

Ursa features non-intrusive interfaces placed in the user's peripheral vision, so essential information stays accessible without obstructing the view of the lunar environment.

Design Process

Context

Who is our user?

Astronaut

Hands occupied by heavy equipment

Astronauts often need to carry heavy equipment in their hands, which limits their ability to operate a system by hand.

Harsh lunar environments

The extreme conditions on the moon require astronauts to continuously monitor their suits' signals and surrounding environment. Consequently, any system used must minimize cognitive load to prevent overburdening the astronauts and ensure their focus remains on critical tasks.

What are NASA’s tasks?

Task 1 - Navigation

Task 2 - Egress (Prepare suits for outdoor tasks)

Task 3 - Geo Sampling

Task 4 - Control Rover

Task 5 - Communicate between LMCC and Astronaut

Explore

I first created 2D prototypes to see how the requirements would function on a digital screen.

To avoid confusing feedback for different needs, I identified three key interaction models through inspection.

I also built an interactive prototype with voice flow to demonstrate the voice assistance feature.

Final Design

Principle 1

Provide actionable insights, not just raw data.

Don’t

User: “Ursa, what’s my heart rate?”

Ursa: “Your heart rate is 90 bpm.”

This provides only the number without any interpretation or guidance.

Do

User: “Ursa, what’s my heart rate?”

Ursa: “Your heart rate is 90 bpm {heart_rate}, and it’s within the nominal {nominal} range.”

Here, it gives the heart rate and informs the astronaut that it’s within a safe range.
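The "Do" response above can be sketched in a few lines of Python. This is a minimal illustration, not the team's implementation: the `NominalRange` type, the `describe_heart_rate` function, and the 50 to 160 bpm limits are all hypothetical and chosen only to show how a raw reading can be paired with an interpretation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NominalRange:
    """Inclusive lower and upper bounds for a telemetry value."""
    low: float
    high: float


# Hypothetical limits for illustration only; not actual mission thresholds.
HEART_RATE_BPM = NominalRange(low=50, high=160)


def describe_heart_rate(heart_rate: float,
                        nominal: NominalRange = HEART_RATE_BPM) -> str:
    """Return the reading together with an interpretation of it."""
    if heart_rate < nominal.low:
        status = "below the nominal range"
    elif heart_rate > nominal.high:
        status = "above the nominal range"
    else:
        status = "within the nominal range"
    return f"Your heart rate is {heart_rate:.0f} bpm, and it's {status}."


print(describe_heart_rate(90))
```

The same pattern would apply to any suit reading: the value fills one slot of the response and the comparison against its range fills the other.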

Principle 2

The system should dynamically recognize and interpret alternative words that convey the same intent.

We used an Intent + Utterance + Alternative Slot structure to break down users’ commands.

Notes

Intent: The objective of voice interaction

Utterance: How the user phrases a command

Slot: Required or optional variables

Intent

User wants to move to the next task

Utterance

User: “Hi Ursa!”

The system is activated by the unique call-sign "Hi Ursa," chosen to avoid accidental triggers in regular conversations.

Alternative Slot

User: “Move to next task”

Users who phrase the request differently should still trigger the same command, for example “Next”, “Finished”, or “Task 2”.
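As a rough sketch of the Intent + Utterance + Slot idea, the Python below maps several phrasings onto one intent and extracts an optional task number. Everything here is an assumption made for illustration: the intent name `move_to_task`, the utterance list, and the helper functions are hypothetical, and a plain lookup stands in for the LLM-based interpretation that Ursa actually relies on.

```python
import re

WAKE_PHRASE = "hi ursa"

# Hypothetical utterance table: alternative phrasings for a single intent.
INTENT_UTTERANCES = {
    "move_to_task": ["move to next task", "next task", "next", "finished"],
}

# "Task 2" style commands carry an optional slot: the task number.
TASK_SLOT = re.compile(r"^task (\d+)$")


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse repeated whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", "", text.lower())
    return " ".join(cleaned.split())


def is_wake_phrase(text: str) -> bool:
    """True when the utterance is exactly the call-sign."""
    return normalize(text) == WAKE_PHRASE


def parse_command(text: str):
    """Return (intent, slots) for a recognized command, otherwise None."""
    phrase = normalize(text)
    match = TASK_SLOT.match(phrase)
    if match:
        return ("move_to_task", {"task_number": int(match.group(1))})
    for intent, utterances in INTENT_UTTERANCES.items():
        if phrase in utterances:
            return (intent, {})
    return None


for spoken in ["Move to next task", "Next", "Finished", "Task 2"]:
    print(spoken, "->", parse_command(spoken))
```

An exact-match table like this only covers phrasings listed in advance; it is shown here to make the structure concrete, not as a substitute for a model that can generalize to unseen wording.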

Principle 3

Use visual feedback to assist communication

Use visual feedback to enhance communication

Designing VUI to avoid ambiguity

Clear, precise voice commands are crucial to prevent misunderstandings. We focused on creating intuitive prompts and responses so that users receive accurate feedback from the AR assistant.

Balancing 3D AR and 2D design

Designing for 3D AR environments differs greatly from 2D screens. We learned that eye distance and spatial perception require special attention to ensure comfortable, immersive interactions.

Contact Me!

yanishi1221@gmail.com